Diffusion-Based Learning of Contact Plans for Agile Locomotion

ATARI Lab, Technical University Munich

Published at 2024 Humanoids

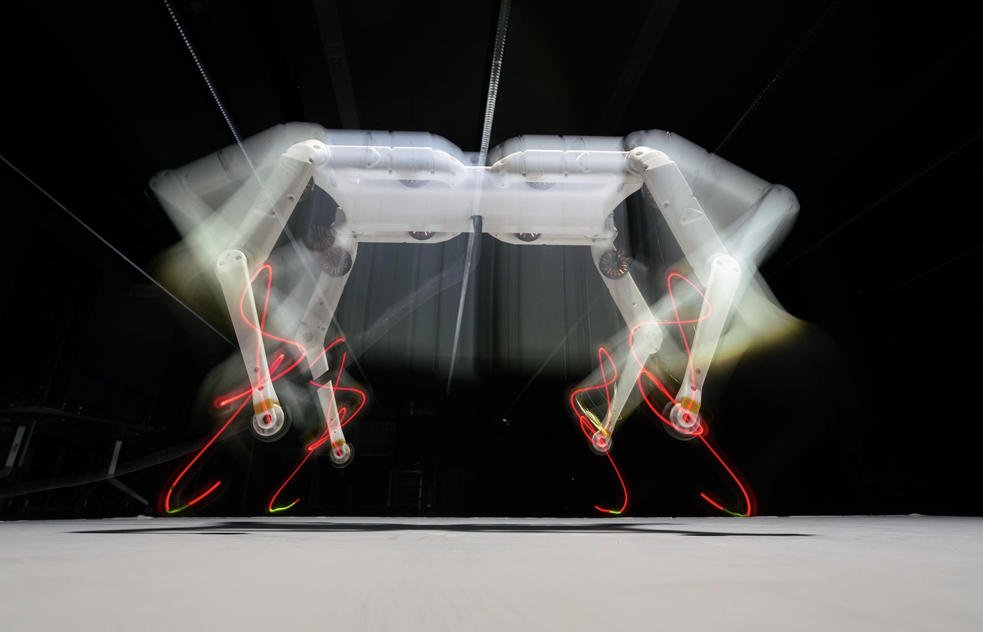

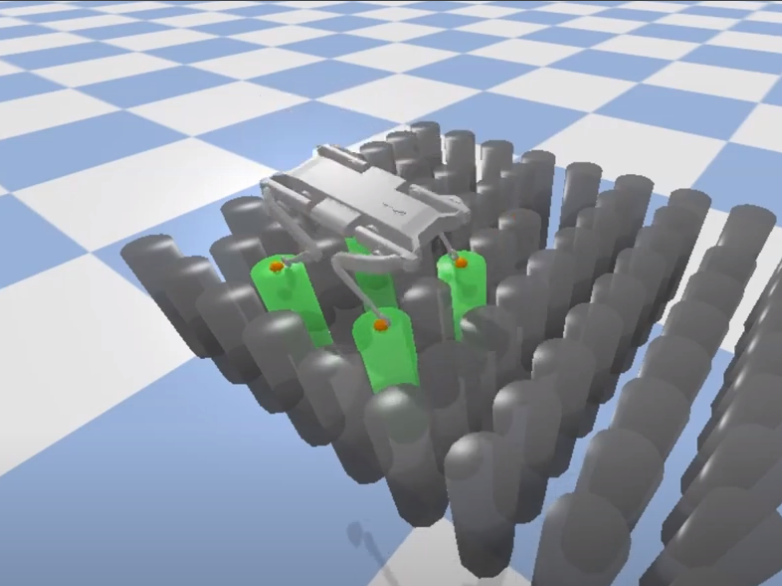

Legged robots have become capable of performing highly dynamic maneuvers in the past few years. However, agile locomotion in highly constrained environments such as stepping stones is still a challenge. In this paper, we propose a combination of model-based control, search, and learning to design efficient control policies for agile locomotion on stepping stones. In our framework, we use nonlinear model predictive control (NMPC) to generate whole-body motions for a given contact plan. To efficiently search for an optimal contact plan, we propose to use Monte Carlo tree search (MCTS). While the combination of MCTS and NMPC can quickly find a feasible plan for a given environment (a few seconds), it is not yet suitable to be used as a reactive policy.

Hence, we generate a dataset for an optimal goal-conditioned policy for a given scene and learn it through supervised learning. In particular, we leverage the power of diffusion models in handling multi-modality in the dataset. We test our proposed framework on a scenario where our quadruped robot Solo12 successfully jumps to different goals in a highly constrained environment.
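To illustrate the search part of this pipeline, here is a minimal sketch of Monte Carlo tree search over stepping-stone sequences. It is not the paper's implementation: the stone layout, the `MAX_JUMP` reach limit, the reward, and all function names are assumptions made for this example, and the distance check in `feasible` only stands in for the NMPC feasibility evaluation.

```python
import math
import random

# Hypothetical scene: stepping-stone centres in metres (not from the paper).
STONES = [(0.0, 0.0), (0.3, 0.1), (0.3, -0.2), (0.6, 0.0), (0.9, 0.1), (0.7, -0.3)]
MAX_JUMP = 0.45  # assumed reach limit; a stand-in for an NMPC feasibility check
MAX_JUMPS = 6    # assumed cap on plan length


def feasible(a, b):
    """Placeholder for NMPC: accept a jump if the two stones are close enough."""
    return a != b and math.dist(STONES[a], STONES[b]) <= MAX_JUMP


class Node:
    def __init__(self, stone, parent=None):
        self.stone, self.parent = stone, parent
        self.children, self.visits, self.value = {}, 0, 0.0

    def plan(self):
        """Sequence of stones from the root to this node."""
        node, out = self, []
        while node is not None:
            out.append(node.stone)
            node = node.parent
        return out[::-1]


def untried_actions(node):
    used = set(node.plan())
    return [s for s in range(len(STONES))
            if s not in used and s not in node.children and feasible(node.stone, s)]


def ucb(parent, child, c):
    """UCT score: mean reward plus an exploration bonus."""
    return child.value / child.visits + c * math.sqrt(math.log(parent.visits) / child.visits)


def rollout(node, goal, rng):
    """Random playout; reaching the goal pays more for shorter plans."""
    plan = node.plan()
    stone, used, jumps = node.stone, set(plan), len(plan) - 1
    while stone != goal and jumps < MAX_JUMPS:
        options = [s for s in range(len(STONES)) if s not in used and feasible(stone, s)]
        if not options:
            return 0.0  # dead end
        stone = rng.choice(options)
        used.add(stone)
        jumps += 1
    return 1.0 - 0.1 * jumps if stone == goal else 0.0


def mcts(start, goal, iterations=500, c=1.4, seed=0):
    rng = random.Random(seed)
    root = Node(start)
    for _ in range(iterations):
        node = root
        # Selection: descend while the node is fully expanded and not terminal.
        while node.stone != goal and node.children and not untried_actions(node):
            parent = node
            node = max(parent.children.values(), key=lambda ch: ucb(parent, ch, c))
        # Expansion: add one untried child, if any.
        options = untried_actions(node) if node.stone != goal else []
        if options:
            stone = rng.choice(options)
            node.children[stone] = Node(stone, node)
            node = node.children[stone]
        # Simulation and backpropagation.
        reward = rollout(node, goal, rng)
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    # Read out the plan by following the most-visited children.
    node = root
    while node.children and node.stone != goal:
        node = max(node.children.values(), key=lambda ch: ch.visits)
    return node.plan()


if __name__ == "__main__":
    print(mcts(start=0, goal=4))
```

In this toy scene several equally short plans reach the goal, which is a small-scale instance of the multi-modality that the abstract says the diffusion model is used to handle.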

Visual-Inertial and Leg Odometry Fusion for Dynamic Locomotion

Max Planck Institute for Intelligent Systems, Tuebingen

Published at 2023 IEEE International Conference on Robotics and Automation

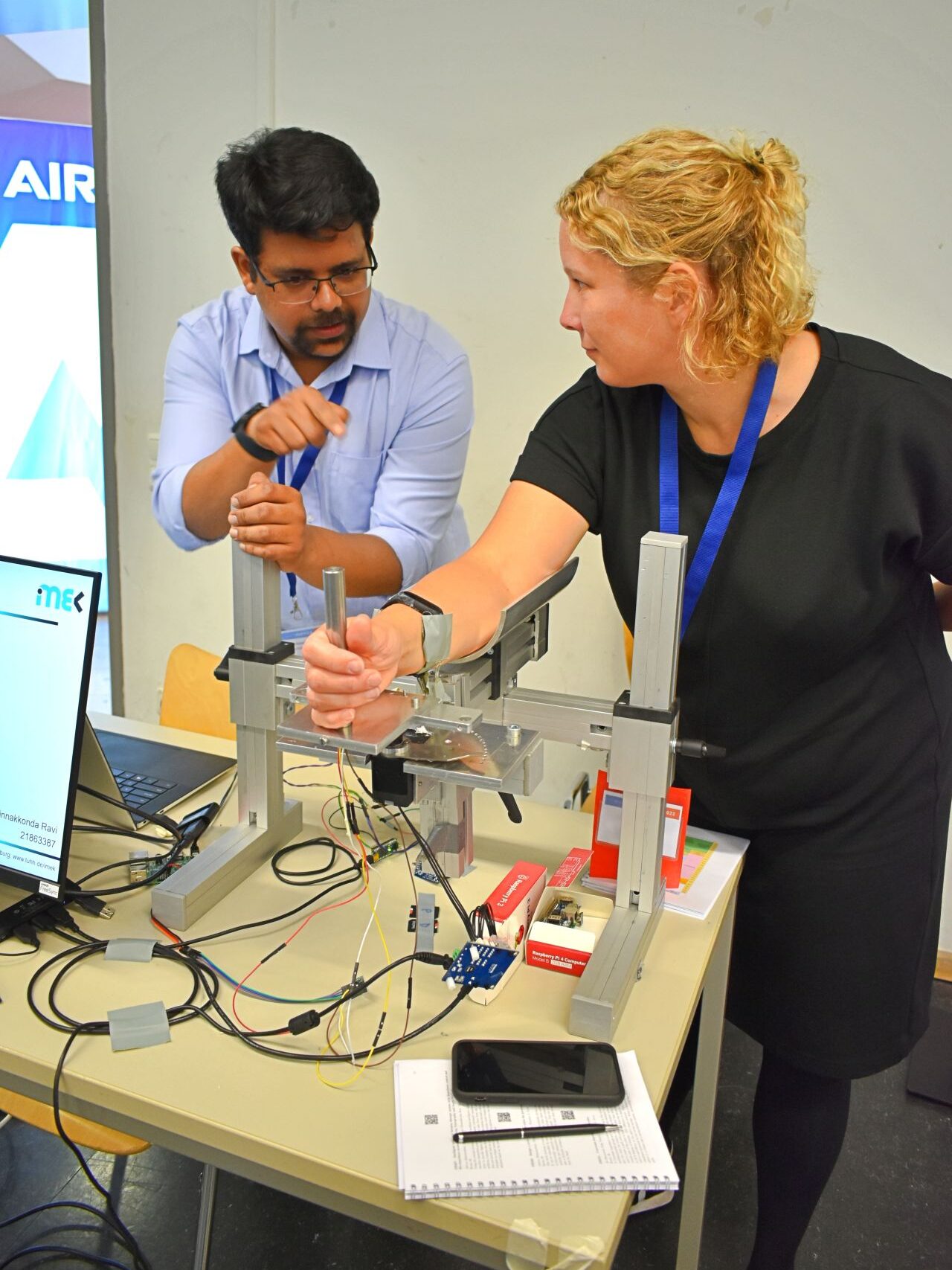

Development of a New Control System for a Rehabilitation Robot Using Electrical Impedance Tomography and Artificial Intelligence

Technical University Hamburg, Germany

Published at 2023 Intelligent Human-Robot Interaction, Biomimetics

This study presents a tomography-based control system for a rehabilitation robot, utilizing a dynamic model that incorporates the torque generated by the robot and the patient’s hand impedance to guide rehabilitation steps. A regression model, based on tomography signal analysis, estimates muscle states to adjust the torque applied by the robot during sessions. The protocol involves two main steps: calculating subject-specific parameters (e.g., axis offset, inertia, damping, stiffness) and identifying interaction-related torque. Testing on participants demonstrated promising results, with impedance–position prediction errors below 2% and effective individualized force and position control across varying patient impedances.
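The two protocol steps can be sketched with a simple example. The code below assumes a single-joint, second-order impedance model, tau = J*qdd + B*qd + K*(q - q0), which is a common textbook form and not necessarily the model used in the study. All function names and the synthetic data are hypothetical. Step 1 fits inertia, damping, stiffness, and axis offset by linear least squares; step 2 treats the residual between measured and predicted torque as the interaction-related torque.

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


def fit_impedance(q, qd, qdd, tau):
    """Step 1 (assumed form): least-squares fit of tau = J*qdd + B*qd + K*q + c."""
    rows = [[a, v, p, 1.0] for p, v, a in zip(q, qd, qdd)]
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    Atb = [sum(r[i] * t for r, t in zip(rows, tau)) for i in range(4)]
    inertia, damping, stiffness, bias = solve(AtA, Atb)
    # The constant term equals -K*q0, so the axis offset is q0 = -c/K.
    return {"inertia": inertia, "damping": damping,
            "stiffness": stiffness, "offset": -bias / stiffness}


def interaction_torque(params, q, qd, qdd, tau):
    """Step 2 (assumed form): measured torque minus the passive-model prediction."""
    return [t - (params["inertia"] * a + params["damping"] * v
                 + params["stiffness"] * (p - params["offset"]))
            for p, v, a, t in zip(q, qd, qdd, tau)]


def synthetic_session(inertia=0.02, damping=0.5, stiffness=3.0, offset=0.1, n=400):
    """Noise-free two-frequency trajectory used only to exercise the fit."""
    q, qd, qdd, tau = [], [], [], []
    for i in range(n):
        t = 5.0 * i / (n - 1)
        p = 0.4 * math.sin(2 * t) + 0.1 * math.sin(5 * t)
        v = 0.8 * math.cos(2 * t) + 0.5 * math.cos(5 * t)
        a = -1.6 * math.sin(2 * t) - 2.5 * math.sin(5 * t)
        q.append(p)
        qd.append(v)
        qdd.append(a)
        tau.append(inertia * a + damping * v + stiffness * (p - offset))
    return q, qd, qdd, tau


if __name__ == "__main__":
    data = synthetic_session()
    params = fit_impedance(*data)
    print(params)
    print(max(abs(r) for r in interaction_torque(params, *data)))
```

The normal equations are solved in plain Python only to keep the sketch dependency-free; with real, noisy measurements a numerically sturdier solver and filtered velocity and acceleration estimates would likely be needed.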

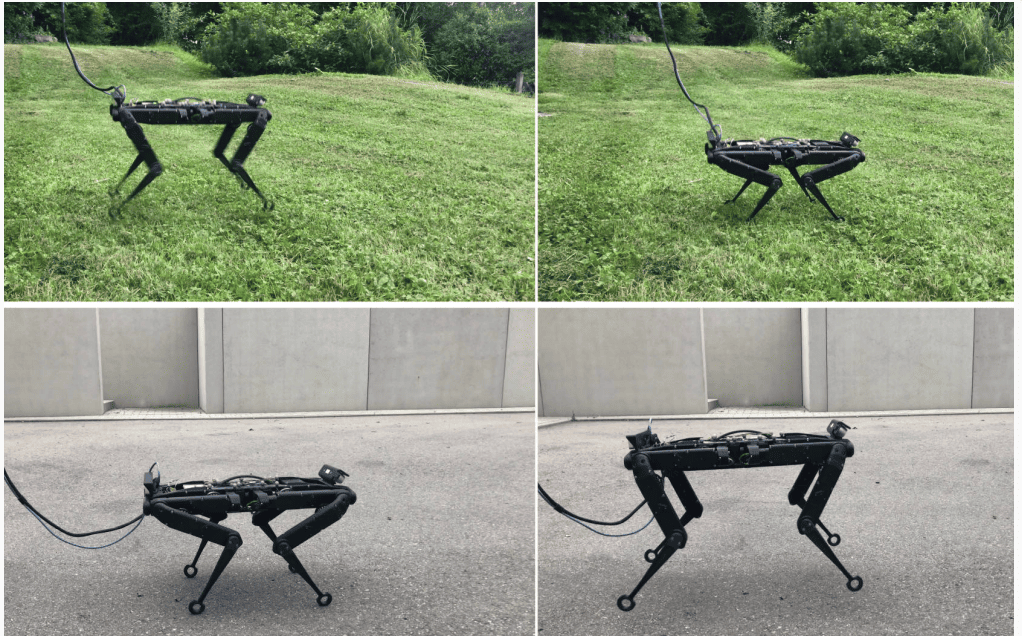

Model-free Reinforcement Learning for Robust Locomotion for 12 DoF Legged Robots

Max Planck Institute for Intelligent Systems, Tuebingen

Published at 2023 Intelligent Human-Robot Interaction, Biomimetics

A two-stage reinforcement learning framework was adapted to and tested on the 12-DoF quadruped robot Solo12. The approach used a single demonstration generated through trajectory optimization to initiate exploration, followed by direct optimization of task-specific rewards to develop policies resilient to environmental uncertainties. The work involved customizing the framework for Solo12’s kinematics and dynamics, refining reward functions, addressing hardware constraints, and validating the system on dynamic tasks such as hopping and bounding to check real-world robustness.
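As a rough illustration of the two-stage idea, the sketch below switches between a demonstration-tracking reward and a task reward. The reward shapes, weights, and function names are assumptions chosen for this example and are not taken from the framework itself.

```python
import math


def imitation_reward(state, demo_state, scale=5.0):
    """Stage 1 (assumed shape): reward tracking of the single demonstration."""
    err = sum((s - d) ** 2 for s, d in zip(state, demo_state))
    return math.exp(-scale * err)


def task_reward(base_height, target_height, torques, torque_weight=1e-3):
    """Stage 2 (assumed shape): task error plus a small effort penalty."""
    effort = sum(u * u for u in torques)
    return -abs(base_height - target_height) - torque_weight * effort


def staged_reward(stage, state, demo_state, base_height, target_height, torques):
    """Pick the reward for the current training stage (1 or 2)."""
    if stage == 1:
        return imitation_reward(state, demo_state)
    return task_reward(base_height, target_height, torques)


if __name__ == "__main__":
    demo = [0.0, 0.25, 0.1]
    print(staged_reward(1, [0.0, 0.24, 0.1], demo, 0.24, 0.4, [1.0] * 12))
    print(staged_reward(2, [0.0, 0.24, 0.1], demo, 0.24, 0.4, [1.0] * 12))
```

Tracking the demonstration first gives the policy a sensible starting behavior; dropping the tracking term afterwards lets the task reward alone drive the final policy.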